1. Full project description and program download

>Mianbaoduo (面包多) secure trading platform: https://mbd.pub/o/bread/ZJaWmpxx

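>If the link is broken, you can open this site's shop directly and search for the relevant store.

>If the link is broken, or for help with program debugging errors or project collaboration, you can also get in touch via WeChat or QQ.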

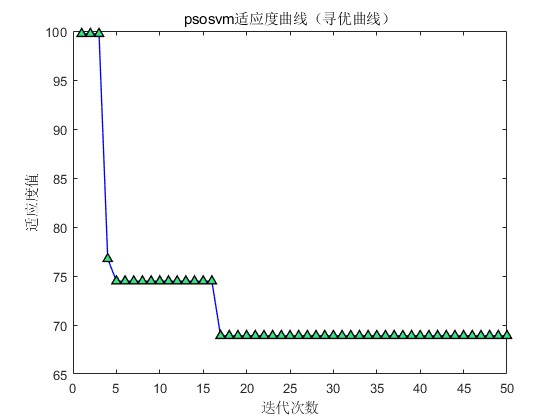

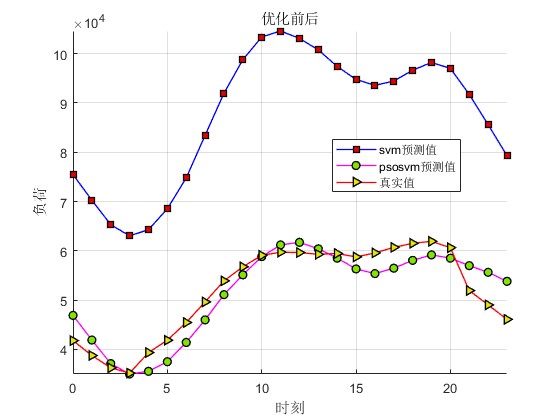

2. Preview of selected simulation figures

3. Algorithm overview

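A support vector machine (SVM) is a binary classification algorithm: it separates data described by several features (attributes) into two classes. Among today's mainstream machine learning algorithms, neural networks and other models already handle binary and multi-class classification well. Even so, studying SVM and the rich theory behind it pays off when researching other algorithms later. Implementing an SVM draws on several previously introduced topics, including one-dimensional (line) search, the KKT conditions, and penalty functions. This article first explains the principles of SVM in detail and then shows how to implement an SVM from scratch in Python.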
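The overview can be made concrete with a minimal Python sketch of the kind of kernel classifier whose parameters are tuned in section 4. This is a sketch under assumptions. The parameter names `gam` and `sig` printed by that code suggest a least-squares SVM (LS-SVM) with an RBF kernel, but the training and fitness code is elided. LS-SVM replaces the SVM's inequality constraints with equalities, so the KKT conditions reduce to one linear system instead of a quadratic program. The function names (`rbf_kernel`, `lssvm_train`, `lssvm_predict`, `error_rate`) are hypothetical. The kernel form exp(-||x - z||^2 / sig2) and the use of the function-estimation form on ±1 labels are also assumptions.

```python
import math


def rbf_kernel(x, z, sig2):
    # Assumed RBF form: exp(-||x - z||^2 / sig2)
    d2 = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-d2 / sig2)


def solve_linear(A, rhs):
    # Gaussian elimination with partial pivoting on the augmented matrix [A | rhs]
    n = len(rhs)
    M = [row[:] + [rhs[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def lssvm_train(X, y, gam, sig2):
    # KKT conditions of the LS-SVM problem give the linear system
    #   [0   1^T         ] [b    ]   [0]
    #   [1   K + I / gam ] [alpha] = [y]
    n = len(X)
    A = [[0.0] + [1.0] * n]
    for i in range(n):
        row = [1.0] + [rbf_kernel(X[i], X[j], sig2) for j in range(n)]
        row[i + 1] += 1.0 / gam  # regularisation on the diagonal
        A.append(row)
    sol = solve_linear(A, [0.0] + [float(v) for v in y])
    return sol[0], sol[1:]  # bias b, multipliers alpha


def lssvm_predict(X, alpha, b, sig2, x):
    # Sign of the decision function f(x) = sum_i alpha_i K(x_i, x) + b
    s = b + sum(a * rbf_kernel(xi, x, sig2) for a, xi in zip(alpha, X))
    return 1 if s >= 0 else -1


def error_rate(X, y, Xt, yt, gam, sig2):
    # Train on (X, y), return the misclassification rate on (Xt, yt); lower is better
    b, alpha = lssvm_train(X, y, gam, sig2)
    wrong = sum(lssvm_predict(X, alpha, b, sig2, x) != t for x, t in zip(Xt, yt))
    return wrong / len(yt)
```

Here `error_rate` plays the role that the elided `fit_function` appears to play in the PSO loop: a lower value means a better (gam, sig2) pair. This is a guess at its behaviour, not the author's implementation.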

4. Partial source code

..............................................................

```matlab
% Velocity limits for each parameter (column 1: c / gam, column 2: g / sig)
Vcmax = pso_option.k*pso_option.popcmax;
Vcmin = -Vcmax;
Vgmax = pso_option.k*pso_option.popgmax;
Vgmin = -Vgmax;

%% Generate the initial particles and velocities
for i = 1:pso_option.sizepop
    % Random initial position inside the search bounds and random initial velocity
    pop(i,1) = (pso_option.popcmax-pso_option.popcmin)*rand + pso_option.popcmin;
    pop(i,2) = (pso_option.popgmax-pso_option.popgmin)*rand + pso_option.popgmin;
    V(i,1) = Vcmax*(2*rand-1);   % uniform in [-Vcmax, Vcmax], same as Vcmax*rands(1,1)
    V(i,2) = Vgmax*(2*rand-1);   % uniform in [-Vgmax, Vgmax]
    % Initial fitness (lower is better)
    fitness(i) = fit_function(pop(i,:),X,Y,Xt,Yt);
end

% Find the best value and the best position
[global_fitness, bestindex] = min(fitness);  % global best value
local_fitness = fitness;                     % personal best values (initial)
global_x = pop(bestindex,:);                 % global best position
local_x = pop;                               % personal best positions (initial)

% Global best and average fitness of each generation
fit_gen = zeros(1,pso_option.maxgen);
avgfitness_gen = zeros(1,pso_option.maxgen);

%% Iterative optimization
for i = 1:pso_option.maxgen
    for j = 1:pso_option.sizepop
        % Velocity update
        V(j,:) = pso_option.wV*V(j,:) + pso_option.c1*rand*(local_x(j,:) - pop(j,:)) + pso_option.c2*rand*(global_x - pop(j,:));
        % Clamp the velocity to [Vmin, Vmax]
        V(j,1) = min(max(V(j,1), Vcmin), Vcmax);
        V(j,2) = min(max(V(j,2), Vgmin), Vgmax);

        % Position update
        pop(j,:) = pop(j,:) + pso_option.wP*V(j,:);
        % Clamp the position to the search bounds
        pop(j,1) = min(max(pop(j,1), pso_option.popcmin), pso_option.popcmax);
        pop(j,2) = min(max(pop(j,2), pso_option.popgmin), pso_option.popgmax);

        % Particle mutation: with probability 0.2, re-sample one randomly chosen coordinate
        if rand > 0.8
            k = ceil(2*rand);
            if k == 1
                % re-sample c within its own bounds [popcmin, popcmax]
                pop(j,1) = (pso_option.popcmax-pso_option.popcmin)*rand + pso_option.popcmin;
            else
                % re-sample g within [popgmin, popgmax]
                pop(j,2) = (pso_option.popgmax-pso_option.popgmin)*rand + pso_option.popgmin;
            end
        end

        % Fitness value
        fitness(j) = fit_function(pop(j,:),X,Y,Xt,Yt);

        % Personal best update (on a tie, prefer the smaller c)
        if fitness(j) < local_fitness(j) || ...
           (fitness(j) == local_fitness(j) && pop(j,1) < local_x(j,1))
            local_x(j,:) = pop(j,:);
            local_fitness(j) = fitness(j);
        end

        % Global best update
        if fitness(j) < global_fitness
            global_x = pop(j,:);
            global_fitness = fitness(j);
        end
    end
    fit_gen(i) = global_fitness;
    avgfitness_gen(i) = sum(fitness)/pso_option.sizepop;
end

%% Output the result
disp(['Best fitness: ', num2str(global_fitness), '  corresponding optimal gam and sig: ', num2str(global_x)]);
y = global_x;
```
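For readers who want to experiment outside MATLAB, the PSO loop can be mirrored in Python as below. It is a sketch under stated assumptions:

- `pso_minimize` is a hypothetical name.
- The fitness is any callable on a parameter vector, because the original `fit_function` is elided.
- The default coefficients (`c1=1.5`, `c2=1.7`, `k=0.6`, `w_v=w_p=1`, 20 particles) are assumed values, not ones read from the elided `pso_option` setup.

As in the MATLAB code:

- One random factor is drawn per term and shared across dimensions.
- Velocities are clamped to ±k times each upper bound, and positions are clamped to the search box.
- One coordinate is re-sampled with probability 0.2.
- Personal-best ties go to the smaller first parameter.

```python
import random


def pso_minimize(fitness, lo, hi, sizepop=20, maxgen=100, w_v=1.0, w_p=1.0,
                 c1=1.5, c2=1.7, k=0.6, mutate_prob=0.2, seed=0):
    """Minimise `fitness` over the box [lo, hi] with the PSO variant shown above."""
    rng = random.Random(seed)
    dim = len(lo)
    vmax = [k * h for h in hi]  # mirrors Vcmax = k*popcmax, Vgmax = k*popgmax

    def clamp(v, a, b):
        return min(max(v, a), b)

    # Random initial positions inside the box and velocities in [-vmax, vmax]
    pop = [[rng.uniform(lo[d], hi[d]) for d in range(dim)] for _ in range(sizepop)]
    vel = [[vmax[d] * rng.uniform(-1.0, 1.0) for d in range(dim)] for _ in range(sizepop)]
    fit = [fitness(p) for p in pop]

    pbest = [p[:] for p in pop]  # personal best positions
    pbest_fit = fit[:]
    best = min(range(sizepop), key=lambda i: fit[i])
    gbest, gbest_fit = pop[best][:], fit[best]  # global best

    history = []  # global best fitness after each generation (like fit_gen)
    for _ in range(maxgen):
        for j in range(sizepop):
            # One random factor per term, shared by all dimensions
            r1, r2 = rng.random(), rng.random()
            for d in range(dim):
                v = (w_v * vel[j][d]
                     + c1 * r1 * (pbest[j][d] - pop[j][d])
                     + c2 * r2 * (gbest[d] - pop[j][d]))
                vel[j][d] = clamp(v, -vmax[d], vmax[d])
                pop[j][d] = clamp(pop[j][d] + w_p * vel[j][d], lo[d], hi[d])

            # Mutation: re-sample one random coordinate within its own bounds
            if rng.random() < mutate_prob:
                d = rng.randrange(dim)
                pop[j][d] = rng.uniform(lo[d], hi[d])

            fit[j] = fitness(pop[j])
            # Personal best; ties go to the smaller first parameter (c)
            if fit[j] < pbest_fit[j] or (fit[j] == pbest_fit[j] and pop[j][0] < pbest[j][0]):
                pbest[j], pbest_fit[j] = pop[j][:], fit[j]
            if fit[j] < gbest_fit:
                gbest, gbest_fit = pop[j][:], fit[j]
        history.append(gbest_fit)
    return gbest, gbest_fit, history
```

A toy quadratic can stand in for the SVM fitness. For example, `pso_minimize(lambda p: (p[0] - 3) ** 2 + (p[1] - 0.5) ** 2, [0.0, 0.0], [10.0, 2.0], w_v=0.7)` should drive the best fitness toward 0 near (3, 0.5). An inertia weight below 1 (0.7 here) usually damps oscillation. With `w_v=1`, the swarm relies on velocity clamping alone.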
