1.完整项目描述和程序获取

>面包多安全交易平台:https://mbd.pub/o/bread/Y56Vkpdp

>如果链接失效,可以直接打开本站店铺搜索相关店铺:

>如果链接失效,程序调试报错或者项目合作也可以加微信或者QQ联系。

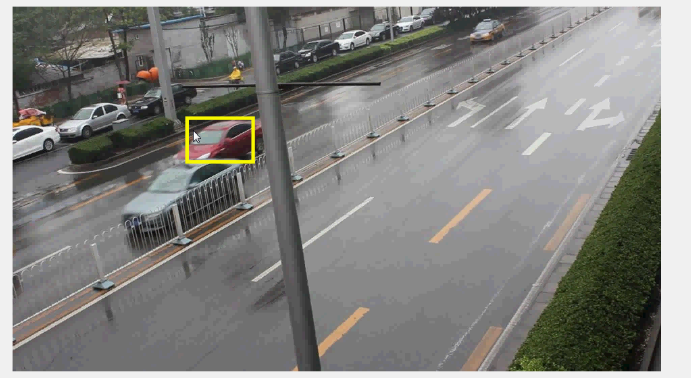

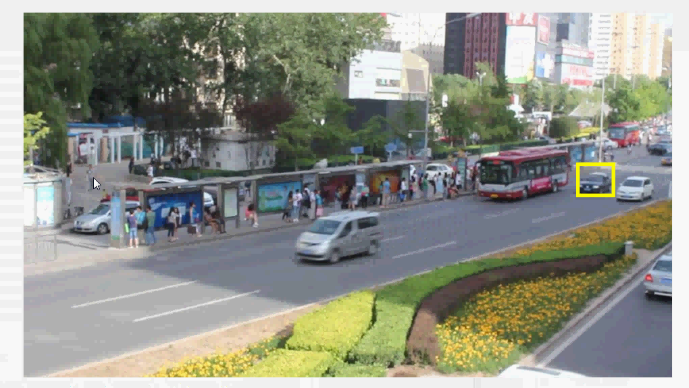

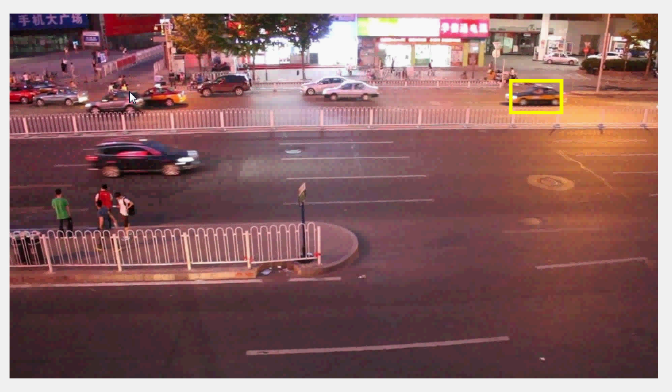

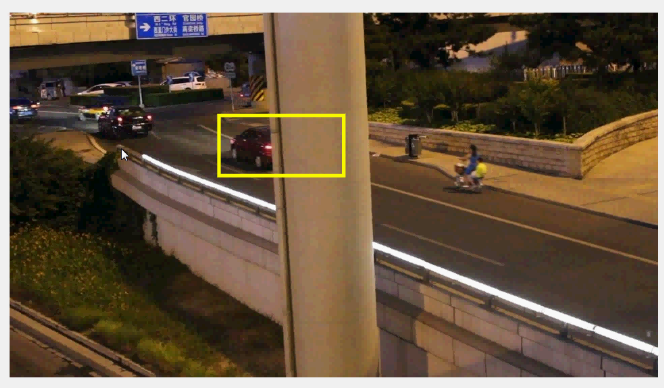

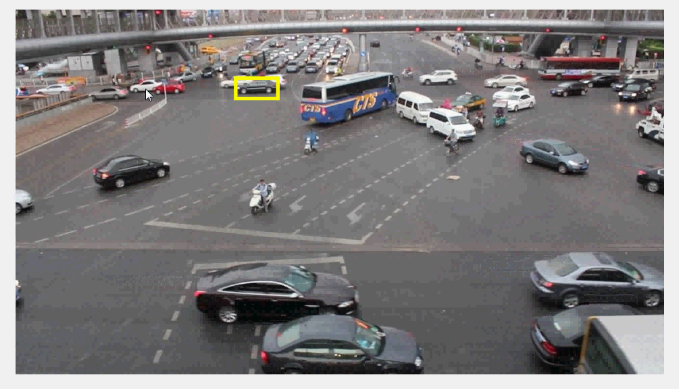

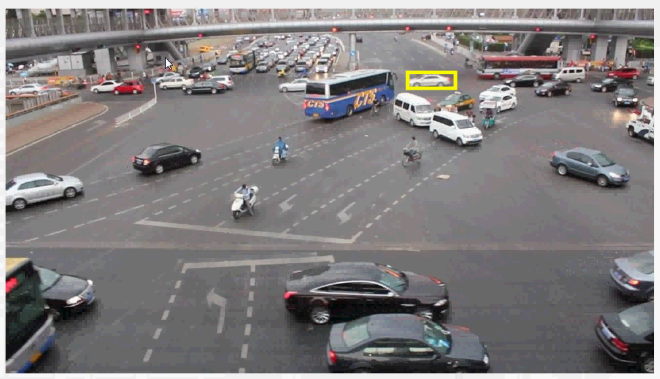

2.部分仿真图预览

3.算法概述

全卷积神经网络(Fully Convolutional Networks,FCN)是Jonathan Long等人于2015年在Fully Convolutional Networks for Semantic Segmentation一文中提出的用于图像语义分割的一种框架,是深度学习用于语义分割领域的开山之作。我们知道,对于一个各层参数结构都设计好的神经网络来说,输入的图片大小是要求固定的,比如AlexNet,VGGNet, GoogleNet等网络,都要求输入固定大小的图片才能正常工作。而 F C N 的 精 髓 就 是 让 一 个 已 经 设 计 好 的 网 络 可 以 输 入 任 意 大 小 的 图 片 \color{blue}{而FCN的精髓就是让一个已经设计好的网络可以输入任意大小的图片}而FCN的精髓就是让一个已经设计好的网络可以输入任意大小的图片。

4.部分源码

...................................................................

rectPosition = [targetPosition([2,1]) - targetSize([2,1])/2, targetSize([2,1])];

data0 = [rectPosition(1);rectPosition(2)];

kalman_state = 0;

dist=0;

X0_new=rectPosition(1);

Y0_new=rectPosition(2);

kalman_start=0;

kalman_start2=0;

kalman_start3=0;

C=960;

R=540;

rc=R/C;

Virx2_=0;

Viry2_=0;

%初始变量大小

S0 = rectPosition(3)*rectPosition(4);

S1 = R*C;

div= S0/S1;

div2=1;

speed=0;

flag = 0;

bw=110;

for i = startFrame:nImgs

if i>startFrame

% load new frame on GPU

im = gpuArray(single(imgFiles{i}));

bw = mean2(mean(double(im)));

% if grayscale repeat one channel to match filters size

if(size(im, 3)==1)

im = repmat(im, [1 1 3]);

end

scaledInstance = s_x .* scales;

scaledTarget = [targetSize(1) .* scales; targetSize(2) .* scales];

% extract scaled crops for search region x at previous target position

x_crops = make_scale_pyramid(im, targetPosition, scaledInstance, p.instanceSize, avgChans, stats, p);

% evaluate the offline-trained network for exemplar x features

[newTargetPosition, newScale] = tracker_eval(net_x, round(s_x), scoreId, z_features, x_crops, targetPosition, window, p);

targetPosition = gather(newTargetPosition);

% scale damping and saturation

s_x = max(min_s_x, min(max_s_x, (1-p.scaleLR)*s_x + p.scaleLR*scaledInstance(newScale)));

targetSize = (1-p.scaleLR)*targetSize + p.scaleLR*[scaledTarget(1,newScale) scaledTarget(2,newScale)];

%分析黄色方框内的图像信息

x0 = round(rectPosition(1));

y0 = round(rectPosition(2));

w = round(rectPosition(3));

h = round(rectPosition(4));

imsub{i} = imgFiles{i}(max(y0,1):min(y0+h,R),max(x0,1):min(x0+w,C),:);

else

% at the first frame output position and size passed as input (ground truth)

end

rectPosition = [targetPosition([2,1]) - targetSize([2,1])/2, targetSize([2,1])];

if i == 1

div0 = targetSize(1)*targetSize(2);

else

div = sqrt(targetSize(1)*targetSize(2)/div0); %放大倍数,用来修正预测速度和坐标

end

%计算跟踪目标的几何中心位置

Xcenter(i) = rectPosition(1);

Ycenter(i) = rectPosition(2);

if i > 1

dist = sqrt((Xcenter(i)-Xcenter(i-1))^2 + (Ycenter(i)-Ycenter(i-1))^2);

if kalman_start == 0

Virx2(i) = dist;

else

Virx2_ = mean(Virx2);

end

end

if i > 1

dist = sqrt((Xcenter(i)-Xcenter(i-1))^2 + (Ycenter(i)-Ycenter(i-1))^2);

dist2(i)= dist;

end

%遮挡判决条件,进行改进,取消原来距离的判决,改为距离和目标大小收缩参数结合的判决方式。

...............................................................................

%状态切换

if i<=10;%前十帧强制进行训练,作为卡尔曼的输入,不管有没有遮挡,否则效果会变差

X1(i) = rectPosition(1);

Y1(i) = rectPosition(2);

Tt(i) = i;

rectPosition(1:2) = [Xcenter(i);Ycenter(i)];

W = rectPosition(3);

H = rectPosition(4);

end

if i>10;%大于10的时候,进行遮挡判决,没遮挡的时候,继续输入卡尔曼作为训练数据

if kalman_start == 1

[Xnew(i),Xnew2(i)] = func_kalman_predict([X1],Tt,1);

[Ynew(i),Ynew2(i)] = func_kalman_predict([Y1],Tt,1);

%启动卡尔曼滤波进行预测估计

rectPosition(1:2) = [Xnew(i);Ynew(i)];

rectPosition(3:4) = [W;H];

%记忆特性保存间隔

X1=[X1(1:end),rectPosition(1)];

Y1=[Y1(1:end),rectPosition(2)];

Tt(i) = i;

else

X1(i) = rectPosition(1);

Y1(i) = rectPosition(2);

Tt(i) = i;

rectPosition(1:2) = [X1(i);Y1(i)];

W = rectPosition(3);

H = rectPosition(4);

end

end

...............................................................................

end

05_064_m